Facebook has been working not only to provide people with credible information about COVID-19, but also to stop the spread of misinformation. In its latest move, the company announced that it will notify people on Facebook's main app who might have come into contact with false news about the virus.

The announcement was made in a blog post on Facebook Newsroom, where the company also noted that it is working with “60 fact-checking organizations that review and rate content in more than 50 languages around the world.” The company will draw on these partnerships to identify fake news on the platform and notify people who have “liked, reacted or commented on harmful misinformation about COVID-19”.

“These messages will connect people to COVID-19 myths debunked by the WHO including ones we’ve removed from our platform for leading to imminent physical harm. We want to connect people who may have interacted with harmful misinformation about the virus with the truth from authoritative sources in case they see or hear these claims again off of Facebook,” the blog post said.

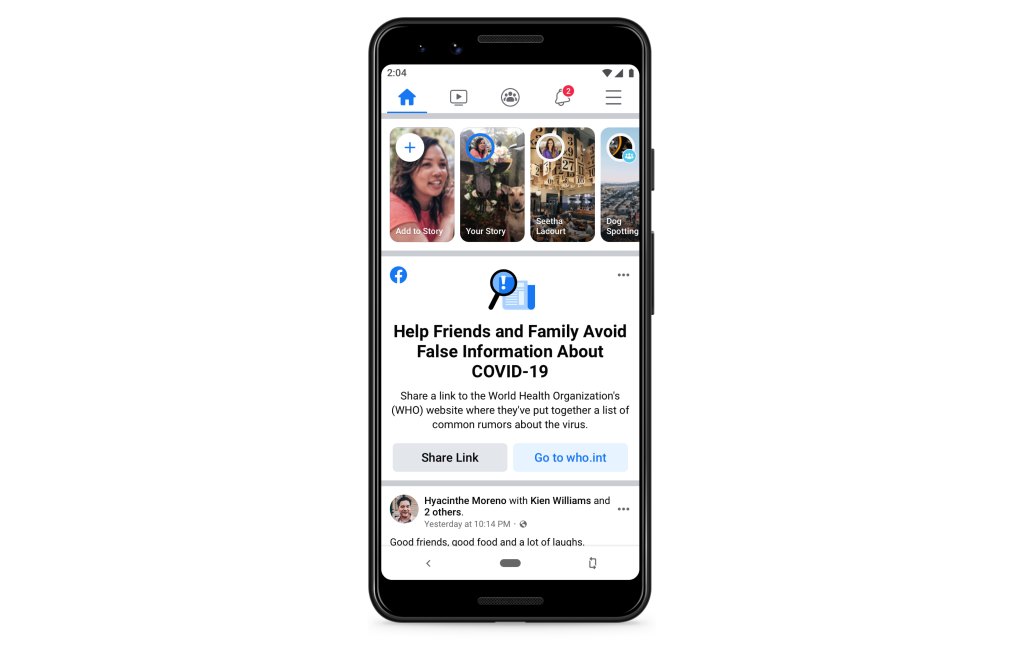

People will start seeing this prompt on their Facebook feed soon:

Driven by fear and panic, people have been falling prey to blatant lies about the virus. The rumour that the coronavirus spreads through 5G networks is one obvious example. Another is the claim that the virus dies in sunlight or at temperatures above 25°C. While these claims may seem physically harmless, they can still spread panic. Others, such as drinking bleach to prevent infection, are far from harmless.

The company has partnered with multiple fact-checking organisations around the world and has even started a $1 million grant program. It also announced the program's first recipients: 13 organisations selected from “around the world to support projects in Italy, Spain, Colombia, India, the Republic of Congo, and other nations.”

Once its fact-checkers flag a piece of fake news, Facebook reduces its distribution and shows warning labels with more context. In March alone, the company displayed warnings on about 40 million COVID-19-related posts on Facebook.

The Tech Portal is published by Blue Box Media Private Limited. Our investors have no influence over our reporting.